Introduction

Autonomous navigation remains one of the most challenging yet promising frontiers in robotics and artificial intelligence. This project presents a complete autonomous navigation system implemented on a Raspberry Pi-powered car, capable of object detection, environmental mapping, optimal path planning, and real-time navigation control. It demonstrates how an embedded computer with limited computing power and a small set of sensors can be leveraged to create a miniature self-driving vehicle that can detect obstacles, recognize stop signs, dynamically remap its environment, and plot alternative routes to reach target destinations.

The system architecture divides the autonomous driving challenge into four distinct but interconnected tasks: Object Detection, Mapping, Planning, and Navigation. Each component plays a crucial role in the overall workflow: the car initializes with a clean map, plans an optimal path, detects obstacles with ultrasonic sensors, remaps the environment when necessary, identifies stop signs with computer vision, and navigates to predefined waypoints while avoiding collisions.

Approaches

The overall autonomous system workflow is:

- Initialization: The system starts with an empty map and computes an initial path using user-defined start and end points.
- Continuous Monitoring: As the car follows the planned path, it continuously monitors for obstacles with the ultrasonic sensor and for stop signs with the camera.
- Dynamic Adaptation: When an obstacle is detected, the car stops, updates its map, computes a new path, and resumes navigation.
- Multiple Destinations: The system can handle sequential navigation to multiple destinations, automatically switching to the next goal point after reaching the current one.

Mapping System

The mapping component transforms sensor data into a usable representation of the environment through a series of processes:

> 1.Map Initialization

A grid-based square map (25×25) is created, with each cell initialized to 0 to represent free space. Each grid cell corresponds to approximately 11.4 cm in the real world.

> 2.Obstacle Detection

The system uses an ultrasonic sensor to scan the environment by measuring distances at angles ranging from -60° to 60° in 5° increments. To mitigate sensor noise, five distance readings are taken at each angle, and the median value is used.
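The sweep described above can be sketched as follows. This is a minimal illustration, not the project's code: `read_distance_cm` is a hypothetical callback standing in for whatever driver call points the sensor at an angle and returns one distance reading.

```python
from statistics import median
from typing import Callable, List, Tuple


def scan_environment(
    read_distance_cm: Callable[[int], float],  # hypothetical sensor callback
    start_deg: int = -60,
    stop_deg: int = 60,
    step_deg: int = 5,
    samples: int = 5,
) -> List[Tuple[int, float]]:
    """Return (angle, median distance) pairs for one sweep."""
    readings = []
    for angle in range(start_deg, stop_deg + 1, step_deg):
        # Take several readings at the same angle and keep the median,
        # which ignores isolated spikes that would skew a mean.
        values = [read_distance_cm(angle) for _ in range(samples)]
        readings.append((angle, median(values)))
    return readings
```

With five samples per angle, up to two outliers at a given angle leave the median unaffected.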

> 3.Coordinate Transformation

Using the car's current position and orientation, trigonometric calculations convert ultrasonic sensor readings into Cartesian coordinates on the occupancy grid. Detected obstacles within a valid range (50 cm) are marked as 1 on the grid.
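A small sketch of this conversion is shown below. The grid size, cell size, and 50 cm range come from the text. The rest is assumed for illustration: the pose is given in centimetres, the scan angle is measured counterclockwise from the car's heading, and the grid is indexed as `(row, col) = (y, x)`.

```python
import math

CELL_CM = 11.4        # real-world size of one grid cell
GRID_SIZE = 25        # the map is GRID_SIZE x GRID_SIZE cells
MAX_RANGE_CM = 50.0   # readings beyond this are ignored


def reading_to_cell(car_x_cm, car_y_cm, heading_deg, angle_deg, dist_cm):
    """Map one ultrasonic reading to a (row, col) cell, or None if unusable."""
    if dist_cm <= 0 or dist_cm > MAX_RANGE_CM:
        return None
    # Absolute bearing of the echo = car heading + sensor angle (assumed convention).
    theta = math.radians(heading_deg + angle_deg)
    x = car_x_cm + dist_cm * math.cos(theta)
    y = car_y_cm + dist_cm * math.sin(theta)
    col = int(x // CELL_CM)
    row = int(y // CELL_CM)
    if 0 <= row < GRID_SIZE and 0 <= col < GRID_SIZE:
        return row, col
    return None  # the obstacle falls outside the map
```

A caller would then set `grid[row][col] = 1` for every reading that returns a cell.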

> 4.Point Interpolation

To address sparse detection points, the system connects nearby points that likely belong to the same obstacle. Points are considered connected if the angle difference is less than 10 degrees and the Euclidean distance is less than 10 cm. The Bresenham line algorithm fills gaps between connected points.
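The connection test and gap filling might look like the sketch below. The thresholds are the ones stated above, while the function names and the `(angle_deg, x_cm, y_cm)` tuple layout are assumptions made for this example.

```python
import math


def should_connect(p, q, max_angle_deg=10.0, max_dist_cm=10.0):
    """p and q are (angle_deg, x_cm, y_cm) detections from the same sweep."""
    angle_gap = abs(p[0] - q[0])
    dist = math.hypot(p[1] - q[1], p[2] - q[2])
    return angle_gap < max_angle_deg and dist < max_dist_cm


def bresenham(r0, c0, r1, c1):
    """Return every grid cell on the line from (r0, c0) to (r1, c1), inclusive."""
    cells = []
    dr, dc = abs(r1 - r0), abs(c1 - c0)
    step_r = 1 if r1 >= r0 else -1
    step_c = 1 if c1 >= c0 else -1
    err = dc - dr
    r, c = r0, c0
    while True:
        cells.append((r, c))
        if (r, c) == (r1, c1):
            return cells
        e2 = 2 * err
        if e2 > -dr:      # step along the column axis
            err -= dr
            c += step_c
        if e2 < dc:       # step along the row axis
            err += dc
            r += step_r
```

For each pair of consecutive detections that passes `should_connect`, the cells returned by `bresenham` between their grid positions would be marked as occupied.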

> 5.Obstacle Expansion

Binary dilation operations are applied to the detected obstacles to account for the car's physical dimensions and sensor inaccuracies, effectively enlarging the obstacle areas on the map.
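To illustrate the effect, here is a dependency-free sketch of binary dilation with a square structuring element. The `radius` parameter is an assumption for this example, since the text does not state the structuring element used. Libraries such as SciPy offer an equivalent operation in `scipy.ndimage.binary_dilation`.

```python
def dilate(grid, radius=1):
    """Binary dilation with a (2*radius + 1)-wide square structuring element."""
    rows, cols = len(grid), len(grid[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c]:
                # Mark every cell within `radius` of an occupied cell,
                # clipping the neighbourhood at the map border.
                for rr in range(max(0, r - radius), min(rows, r + radius + 1)):
                    for cc in range(max(0, c - radius), min(cols, c + radius + 1)):
                        out[rr][cc] = 1
    return out
```

With 11.4 cm cells, each unit of `radius` adds roughly one cell of clearance on every side of an obstacle.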

> 6.Map Updating

The system merges newly detected obstacles with previously scanned data using bitwise OR operations, ensuring that all obstacles remain in the final map.
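The merge step reduces to a cell-wise OR, sketched here on plain nested lists (the function name is illustrative):

```python
def merge_maps(previous, new_scan):
    """Cell-wise OR of two equally sized 0/1 occupancy grids."""
    return [
        [old | new for old, new in zip(row_prev, row_new)]
        for row_prev, row_new in zip(previous, new_scan)
    ]
```

One consequence of this design is that a cell marked as an obstacle is never cleared by a later scan, which suits a static environment.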

Object Detection

The object detection system leverages computer vision technologies to identify stop signs and other relevant objects:

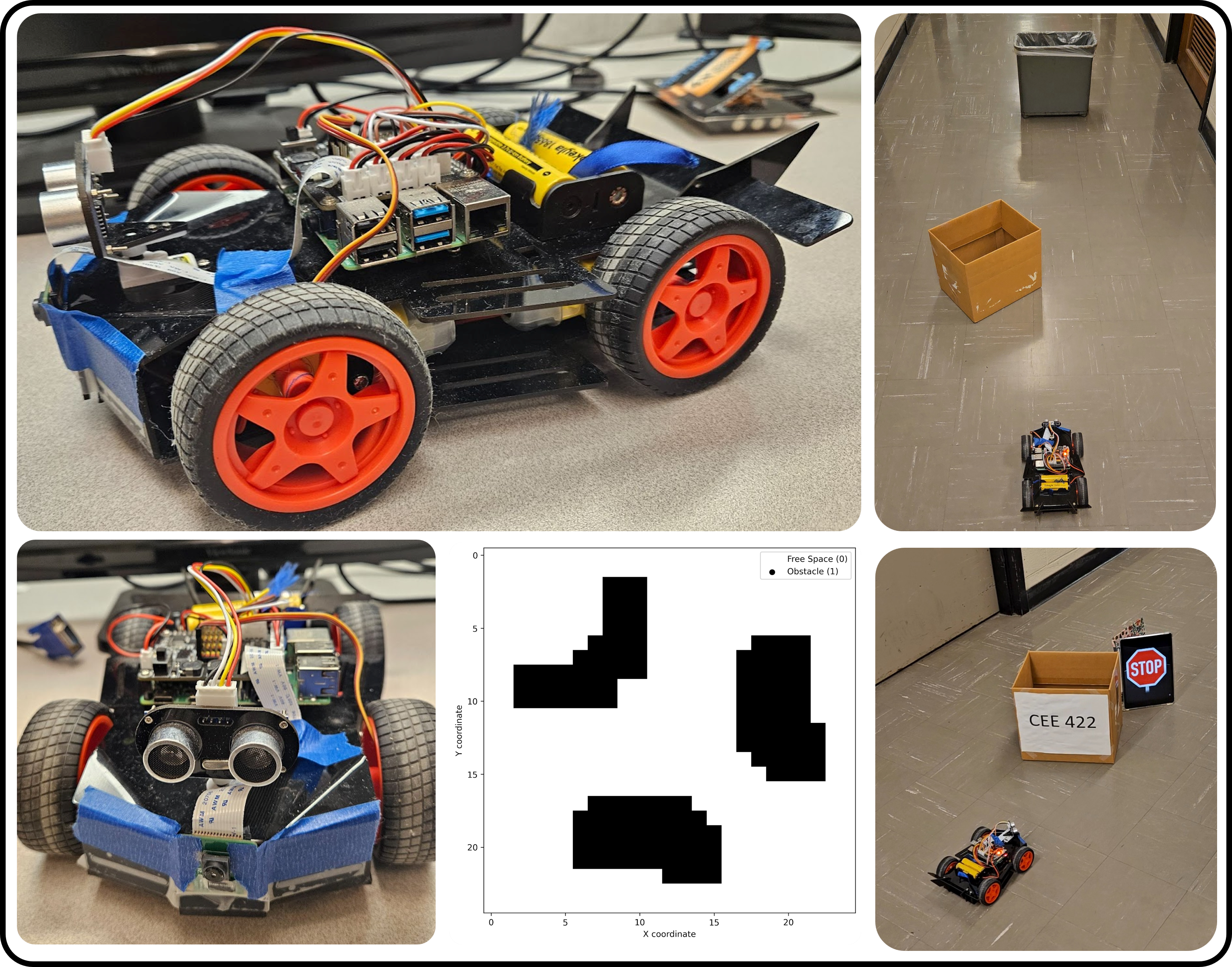

> 1.Hardware Setup

Images are captured using the Raspberry Pi camera (Picamera2) at a resolution of 640×480 pixels, maintaining a frame rate of 8 frames per second for real-time processing.

> 2.Processing Pipeline

Captured images are processed using OpenCV and then analyzed with TensorFlow Lite running an EfficientDet-Lite0 model.

> 3.Stop Sign Detection

When a stop sign is detected, a 'stop_needed' flag is set in the controller, forcing the car to halt for 5 seconds before continuing navigation.
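One way to structure this behaviour is sketched below. Only the `stop_needed` flag and the 5-second hold come from the description above. The class, its method names, and the `"stop sign"` label string are assumptions for illustration.

```python
import time


class StopSignHandler:
    """Tracks whether the car must hold still because of a stop sign."""

    def __init__(self, hold_seconds=5.0, clock=time.monotonic):
        self.hold_seconds = hold_seconds
        self.clock = clock            # injectable so the logic can be tested
        self.stop_needed = False
        self._stop_started = None

    def on_detection(self, label):
        # Called by the vision loop for each detected label (assumed string).
        if label == "stop sign" and not self.stop_needed:
            self.stop_needed = True
            self._stop_started = self.clock()

    def may_drive(self):
        """Return True once no stop is pending or the hold time has elapsed."""
        if not self.stop_needed:
            return True
        if self.clock() - self._stop_started >= self.hold_seconds:
            self.stop_needed = False
            return True
        return False
```

A real controller would also need some way to avoid re-triggering on the same sign while it is still in view after the hold ends. This sketch leaves that out.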

Path Planning

The planning component determines optimal paths using the A* algorithm:

> 1.Heuristic Function

Euclidean distance serves as the heuristic function, estimating the cost from any position to the goal and guiding the search process.

> 2.Path Planning

The A* algorithm prioritizes exploration of the most promising nodes first, efficiently finding the shortest path while avoiding obstacles.
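A compact sketch of A* with a Euclidean heuristic on an occupancy grid follows. It assumes 8-connected movement, with diagonal steps costing √2, and cells given as `(row, col)` tuples. The project's actual neighbourhood and cost model may differ.

```python
import heapq
import math


def astar(grid, start, goal):
    """Shortest 8-connected path on a 0/1 occupancy grid, or None if blocked."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Euclidean distance to the goal: never overestimates the true cost.
        return math.hypot(cell[0] - goal[0], cell[1] - goal[1])

    moves = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    open_heap = [(h(start), 0.0, start)]   # entries are (f, g, cell)
    g_cost = {start: 0.0}
    parent = {start: None}
    closed = set()
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue                        # stale heap entry
        closed.add(cell)
        if cell == goal:
            path = []
            while cell is not None:         # walk parents back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for dr, dc in moves:
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]]:
                continue                    # occupied cell
            new_g = g + math.hypot(dr, dc)
            if new_g < g_cost.get(nxt, math.inf):
                g_cost[nxt] = new_g
                parent[nxt] = cell
                heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

Note that this version lets diagonal moves pass between two diagonally adjacent obstacle cells. Dilating obstacles beforehand reduces the practical risk of clipping a corner.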

> 3.Dynamic Replanning

When obstacles are detected, the system replans the route based on the updated map, ensuring adaptation to environmental changes.

Control System

The navigation controller translates planned paths into physical movements:

> 1.Parameter Configuration

Key control parameters, including PWM settings and turning times, are stored in a configuration file for easy adjustment during testing.

> 2.Motion Control

The system includes functions for moving forward and turning left or right by 45 degrees.

> 3.Waypoint Navigation

For each waypoint in the planned path, the controller calculates the desired heading angle using the atan2 function and determines the steering angle as the difference between the desired heading and the car's current orientation.
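The heading calculation can be sketched as below. The function names and the `(x, y)` convention with angles in degrees are assumptions. Wrapping the difference into [-180°, 180°) is an addition that makes the car take the shorter turn, and `turn_steps` shows how the result could map onto the 45° turn primitives.

```python
import math


def steering_angle_deg(car_xy, heading_deg, waypoint_xy):
    """Signed angle the car must turn to face the waypoint."""
    dx = waypoint_xy[0] - car_xy[0]
    dy = waypoint_xy[1] - car_xy[1]
    desired = math.degrees(math.atan2(dy, dx))
    # Wrap into [-180, 180) so a 350 degree turn becomes a -10 degree turn.
    return (desired - heading_deg + 180.0) % 360.0 - 180.0


def turn_steps(steer_deg, step_deg=45.0):
    """Number of fixed-size turns (sign gives direction) closest to steer_deg."""
    return round(steer_deg / step_deg)
```

On an 8-connected grid, consecutive waypoints differ by a multiple of 45°, so `turn_steps` would normally return an exact count rather than an approximation.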

> 4.Obstacle Avoidance

The front-facing ultrasonic sensor continuously monitors for obstacles. If an obstacle is detected within 40 cm, the car stops and rescans the environment from -60° to 60°.

> 5.State Estimation

The system updates the car's position by assuming that each movement command is executed perfectly: once a move completes, the waypoint just reached is taken as the car's new location.

Results

The autonomous system was successfully tested in three distinct scenarios:

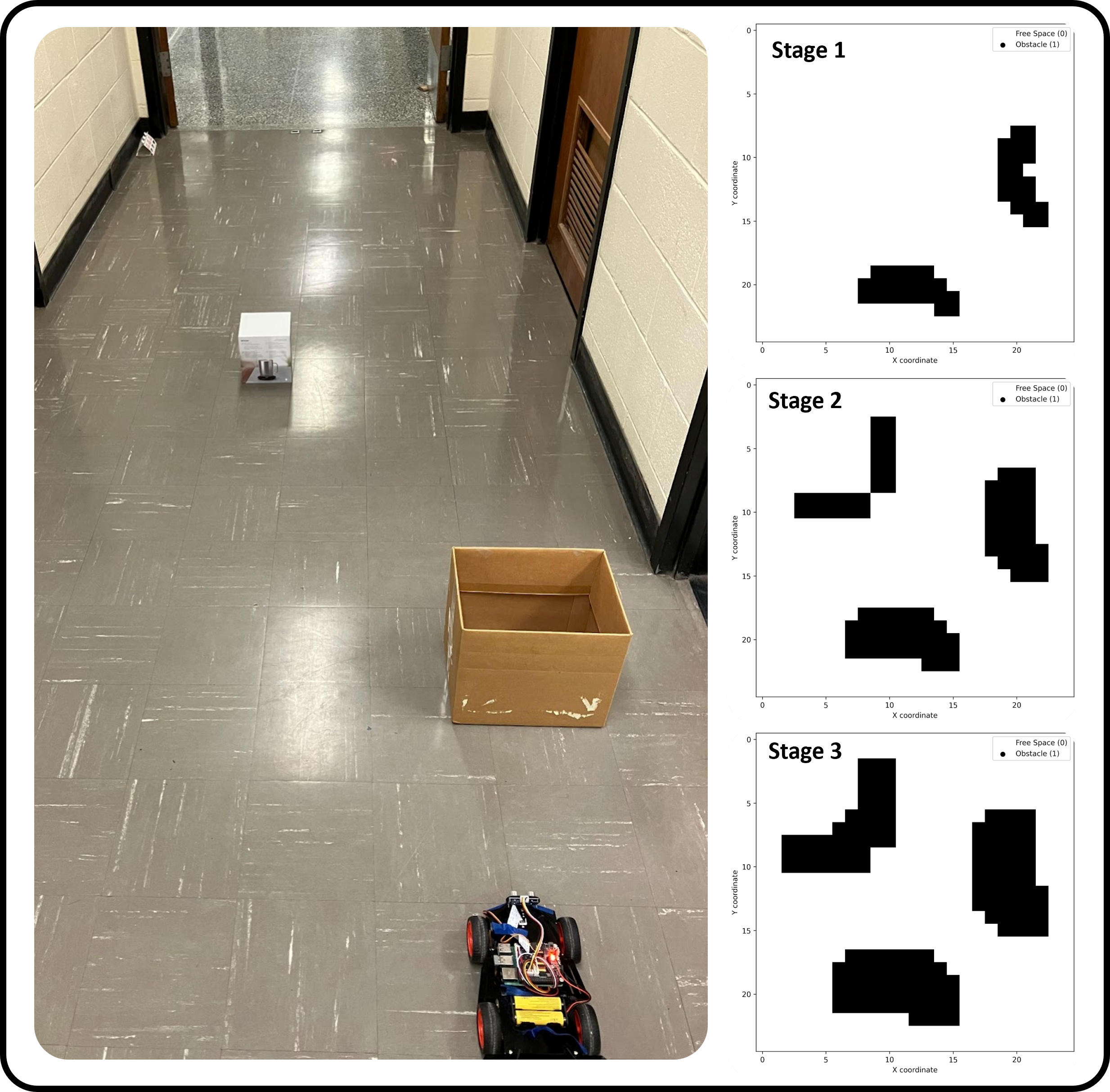

> Scenario 1: Two Obstacles with Multiple Goals

The car was tasked with reaching two sequential goal points, with the first goal positioned in front of an obstacle (labeled as obstacle 2) and the second goal behind it. As the car progressed, it first detected obstacle 1 and a wall, updating its map accordingly. Continuing along the optimal path, it detected obstacle 2, rescanned the environment, and generated an updated map. After reaching the first goal, it detected obstacle 2 again, performed another scan, and created a third map. Finally, the car successfully navigated around obstacle 2 to reach the second goal.

Figure 1. Mapping result of scenario 1

> Scenario 2: Two Obstacles in Different Positions

Similar to the first scenario but with obstacles placed in different locations, the car demonstrated its ability to adapt to varying environmental configurations. The system successfully detected both obstacles, updated its map, planned an optimal route, and reached the single goal point.

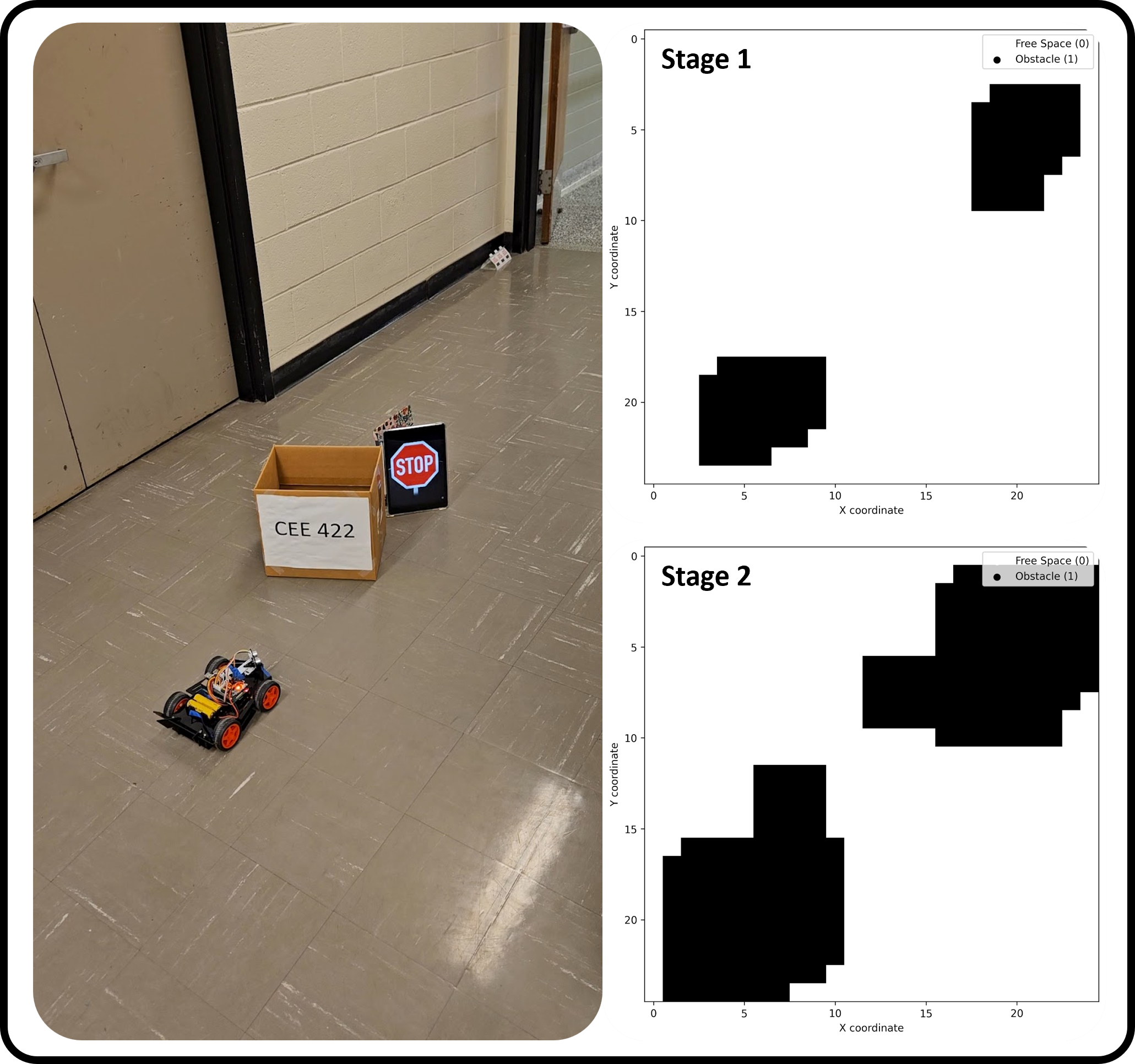

> Scenario 3: One Obstacle with Stop Sign

This scenario incorporated both physical obstacle avoidance and traffic sign recognition. The car navigated toward the goal while properly responding to a detected stop sign by halting for the required 5 seconds before continuing. The mapping system correctly identified and marked both the obstacle and the room walls.

Figure 2. Mapping result of scenario 3

> Demo Video

These results demonstrate the system's robustness in handling various obstacles, recognizing traffic signs, and dynamically replanning paths to reach designated destinations. The project successfully integrates sensing, mapping, planning, and control into a functional autonomous navigation system, showcasing the potential of low-cost robotics platforms for complex autonomous tasks.

Project Code

> For the whole project code, please visit: https://github.com/htliang517/RaspberryPiCar